I posted an image of this on my Dribbble account the other day along with a similar bit of writing, but I thought I should really add a blog post about it here too, as it’s one of my favourite devices. Plus, I’m trying to write a bit more frequently on my blog again.

The Nokia N-Gage is one of what I call the “Handheld Heroes”: 12 handheld devices that I consider to be icons of their time, each symbolic of a particular point of technological change in the last 30 – 40 years (ok, the pencil is quite a bit older than that). The N-Gage was released around 2003, so it is over 20 years old at the time of writing (!).

The N-Gage was an amazing device and in many ways was ahead of its time. Long before Pokémon Go was a thing, Nokia explored the idea of location-based / location-aware games, but mobile connectivity was very limited, very slow and very expensive at that time. I recall thinking about how amazing it would be to walk around with a device that had a constant connection to the internet, but that was a long way off from the ubiquity of 4G / 5G today. So whilst there were a few location-based games for the N-Gage, this lack of affordable mobile data meant it had limited appeal.

Nokia Push was one of the coolest initiatives that they tried. There were two aspects to it, one for skateboarding and one for snowboarding. Both involved attaching sensors to the skateboard or snowboard and tracking telemetry such as rotations, flips, height, speed etc. For skateboarding, the intention was that you could compete with someone in a different location in the world, with both skaters able to see on their phones which tricks the other had done.

For snowboarding, the intention was to track telemetry such as height, speed, rotations, impact etc.:

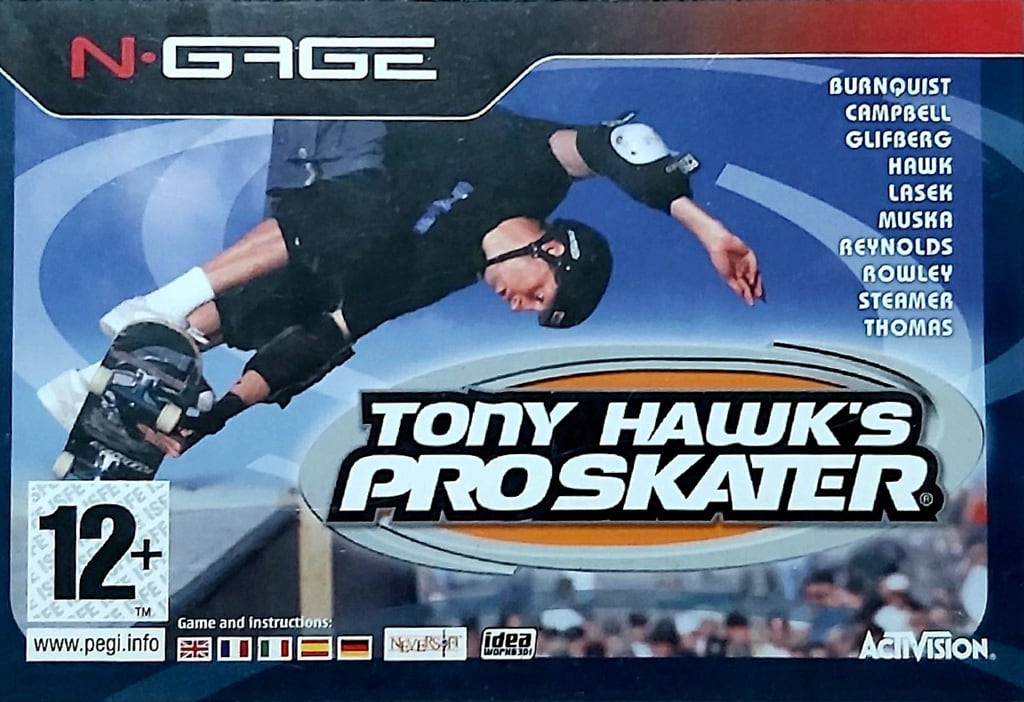

So the N-Gage wasn’t successful in location-based gaming, but it definitely had some of the seeds of what was yet to come, and Nokia Push explored that even further. Yep, the N-Gage was a weird device to make calls on (especially the first version, see “Sidetalking!”), but talking was not what it was really intended for, and I loved mine. What’s not to love about a phone that can play Tony Hawk’s Pro Skater?